GPU AdaBoost

The aim of this project is to create a GPU-accelerated AdaBoost image recognition system. The current version works with just a single image frame and contains a lot of testing and validation code that reports on the accuracy of the solution.

status: functional, yet unoptimized

language: C++

os: os independent

Hardware requirements and limitations

The current version requires shader model 2a (the convolution shaders contain some loops, so strictly speaking they need shader model 3, but the loops could easily be unrolled), support for framebuffer objects (FBOs), and floating-point textures and render targets. Non-power-of-two textures come in handy when non-power-of-two images (such as a 640x480 VGA camera feed) have to be processed.

Processing performance will benefit from:

- convolutions with power-of-two sizes or their multiples

- convolutions with aspect ratios close to 1 (squares are ideal)

- fewer weak classifiers (more classifiers = more texture samples and bigger shaders -> texture and program cache misses)

- smaller features (3x3 is ok, anything above 4x4 could cause trouble; not implemented right now)

Status update

A new version (v0.5) of this crazy adabooster has been released. It still takes just a static image as input, but it is now capable of realtime face recognition (well, there are still optimizations to make). Tests with a 256x256 texture gave framerates around 25 FPS (that's 40 msec per frame). Running at 512x512 isn't so glorious at the moment: it runs at only 7 FPS. But that is roughly proportional to the image size (four times as many pixels), which is good news, because it means there are no significant texture cache misses at the larger image size. Note that this works just as well with non-power-of-two images. Also note there is no source image scaling or rotation, so it is going to detect only well oriented and sized faces. There is no output interleaving either, so it could run even faster that way.

Anyway, the demo is available for download at the bottom of the page. It contains a lot of diagnostic ballast; its output should look like this:

=== Checking OpenGL support ===

OpenGL 1.2

OpenGL 1.3

OpenGL 1.4

OpenGL 1.5

OpenGL 2.0

OpenGL 2.1

GL_ARB_fragment_shader

GL_EXT_framebuffer_object

=== Loading XML classifier ===

AdaBoost classifier 'final classifier'

160 weak classifiers

=== Convolution list dump ===

convolution(block-width: 1, block-height: 1)

convolution(block-width: 2, block-height: 2)

convolution(block-width: 2, block-height: 4)

convolution(block-width: 4, block-height: 2)

=== Load test image ===

done

=== Create convolution plan ===

convolution precision is 16 bits

warning: rasterizer calibration using GL_ARB_fragment_program

warning: render to 3D texture via texture copy (expect less than a half of potential speed)

shader_params(type: 'pack', offset-x: 0 offset-y: 0, mip-level: 0, samples-x:1, samples-y: 1, slice: 0)

shader_params(type: 'pack', offset-x: 0 offset-y: 0, mip-level: 1, samples-x:1, samples-y: 1, slice: 0)

shader_params(type: 'conv', offset-x: 1 offset-y: 0, mip-level: 0, samples-x:2, samples-y: 2, slice: 1)

shader_params(type: 'conv', offset-x: 0 offset-y: 1, mip-level: 0, samples-x:2, samples-y: 2, slice: 2)

shader_params(type: 'conv', offset-x: 1 offset-y: 1, mip-level: 0, samples-x:2, samples-y: 2, slice: 3)

shader_params(type: 'conv', offset-x: 0 offset-y: 0, mip-level: 1, samples-x:1, samples-y: 2, slice: 0)

shader_params(type: 'conv', offset-x: 1 offset-y: 0, mip-level: 0, samples-x:2, samples-y: 4, slice: 1)

shader_params(type: 'conv', offset-x: 0 offset-y: 1, mip-level: 0, samples-x:2, samples-y: 4, slice: 2)

shader_params(type: 'conv', offset-x: 1 offset-y: 1, mip-level: 0, samples-x:2, samples-y: 4, slice: 3)

shader_params(type: 'conv', offset-x: 0 offset-y: 1, mip-level: 1, samples-x:1, samples-y: 2, slice: 4)

shader_params(type: 'conv', offset-x: 1 offset-y: 2, mip-level: 0, samples-x:2, samples-y: 4, slice: 5)

shader_params(type: 'conv', offset-x: 0 offset-y: 3, mip-level: 0, samples-x:2, samples-y: 4, slice: 6)

shader_params(type: 'conv', offset-x: 1 offset-y: 3, mip-level: 0, samples-x:2, samples-y: 4, slice: 7)

shader_params(type: 'conv', offset-x: 0 offset-y: 0, mip-level: 1, samples-x:2, samples-y: 1, slice: 0)

shader_params(type: 'conv', offset-x: 1 offset-y: 0, mip-level: 0, samples-x:4, samples-y: 2, slice: 1)

shader_params(type: 'conv', offset-x: 1 offset-y: 0, mip-level: 1, samples-x:2, samples-y: 1, slice: 2)

shader_params(type: 'conv', offset-x: 3 offset-y: 0, mip-level: 0, samples-x:4, samples-y: 2, slice: 3)

shader_params(type: 'conv', offset-x: 0 offset-y: 1, mip-level: 0, samples-x:4, samples-y: 2, slice: 4)

shader_params(type: 'conv', offset-x: 1 offset-y: 1, mip-level: 0, samples-x:4, samples-y: 2, slice: 5)

shader_params(type: 'conv', offset-x: 2 offset-y: 1, mip-level: 0, samples-x:4, samples-y: 2, slice: 6)

shader_params(type: 'conv', offset-x: 3 offset-y: 1, mip-level: 0, samples-x:4, samples-y: 2, slice: 7)

done

=== Validate convolution ===

done; convolutions of test image come out precise enough (maximal

difference is 1, standard deviation is 0.36449; in 0 - 255 scale)

=== Create AdaBoost plan (it takes a while to compile shaders) ===

done

=== Validate shader synthesis ===

there are 3 free texturing units and 8 texcoords

group size limit is 24 classifiers (24 was ok with 7950GT)

there are 22 classifier groups by mask and texture matching

have 9 classifiers using 1 convolution textures in group 0. probing ...

have 8 classifiers using 1 convolution textures in group 1. probing ...

have 8 classifiers using 1 convolution textures in group 2. probing ...

have 11 classifiers using 1 convolution textures in group 3. probing ...

have 9 classifiers using 1 convolution textures in group 4. probing ...

have 8 classifiers using 1 convolution textures in group 5. probing ...

have 9 classifiers using 1 convolution textures in group 6. probing ...

have 8 classifiers using 1 convolution textures in group 7. probing ...

have 8 classifiers using 1 convolution textures in group 8. probing ...

have 3 classifiers using 1 convolution textures in group 9. probing ...

have 10 classifiers using 2 convolution textures in group 10. probing ...

have 9 classifiers using 2 convolution textures in group 11. probing ...

have 8 classifiers using 2 convolution textures in group 12. probing ...

have 9 classifiers using 1 convolution textures in group 13. probing ...

have 9 classifiers using 2 convolution textures in group 14. probing ...

have 5 classifiers using 1 convolution textures in group 15. probing ...

have 4 classifiers using 1 convolution textures in group 16. probing ...

have 6 classifiers using 1 convolution textures in group 17. probing ...

have 1 classifiers using 1 convolution textures in group 18. probing ...

have 6 classifiers using 1 convolution textures in group 19. probing ...

have 9 classifiers using 1 convolution textures in group 20. probing ...

have 3 classifiers using 1 convolution textures in group 21. probing ...

results are well within limits

=== Validate classifier inputs ===

done; classifier sampling of test image come out precise enough (maximal

difference is 1, standard deviation is 0.267887; in 0 - 255 scale)

=== Validate convolution slice matching ===

convolution slice matching is well within limits

=== Validate feature sampling ===

feature grid sampling is well within limits

=== Calculate test image convolutions ===

done

running at 193.598 frames/sec (0.00516533 frame time)

=== Run AdaBoost on test image ===

precaching shaders ... go!

done

running at 25.0458 frames/sec (0.0399269 frame time)

ok ... no OpenGL erorrs occured

now you can switch between original image and different kernels using

'b' and 'n' or between different shifts of kernel using 'c' and 'v'

It was tested on GeForce 6600GT, 7950GT and Quadro NVS 140M (minor precision issues with this one) graphics cards. In case you want to compile it, you're going to need the UberLame framework r17. Please don't complain if it doesn't work on your machine, I'm still having nightmares from debugging this.

A possible future direction is shader optimization: there are very similar blocks of code which might be better off in a function, but I need to see whether having smaller shaders outweighs the cost of function calls. Then there is a lot of spatial coherency in texture sampling which can surely be exploited; that should bring the size of the shaders down a bit as well. And finally there is an early cutoff for pixels where the classifier output is so negative in the early stages of classification that they could not possibly turn into face pixels and do not require further processing. And of course, there should be a nice demo, crunching AVI files / camera streams.

version history

| version | changes from previous version |
|---|---|
| v0 | - |
| v0.5 | realtime AB completely on GPU |

How does that work?

The program loads a set of weak classifiers from an XML file (kindly provided by Michal Hradis). Each classifier evaluates one LRD feature. To do that, it is necessary to calculate a rectangular grid of convolutions, take two convolution results from positions given by the classifier, compare them with the other convolutions, and based on the outcome select one of the alpha values, which then becomes the classifier output.
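The evaluation of a single weak classifier can be sketched on the CPU as follows. This is only an illustration, assuming that LRD stands for local rank differences (the rank of a cell being the number of grid cells with a smaller sum) and that the rank difference indexes the alpha table; the `LrdClassifier` layout and the function names are hypothetical, not taken from the demo sources.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical layout of one weak classifier over a 3x3 grid of block sums.
struct LrdClassifier {
    std::size_t cellA, cellB;     // indices (0..8) of the two compared cells
    std::array<float, 17> alpha;  // one response per rank difference -8..+8
};

// Rank of one cell: how many grid cells hold a strictly smaller sum.
inline int rankOf(const std::array<int, 9>& grid, std::size_t cell) {
    assert(cell < grid.size());
    int rank = 0;
    for (int value : grid)
        if (value < grid[cell]) ++rank;
    return rank;
}

// Classifier output: the alpha selected by the difference of the two ranks.
inline float evaluateLrd(const LrdClassifier& c, const std::array<int, 9>& grid) {
    const int diff = rankOf(grid, c.cellA) - rankOf(grid, c.cellB);  // in [-8, 8]
    return c.alpha[static_cast<std::size_t>(diff + 8)];
}
```

A strong classifier would then sum such outputs over all weak classifiers (160 in the test set) and threshold the result.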

This is a fairly easy task; on a GPU, however, it has to be split into several stages, not entirely without caveats.

The first stage is the calculation of image convolutions (they are actually windowed image sums). There is a set of different convolution kernels and a set of temporary textures which are filled with the image convolutions.
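For reference, a windowed image sum can be written on the CPU in a few lines. This is a minimal sketch for checking GPU results against, not the shader used in the demo; the function name and the row-major `int` image layout are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sum of every blockW x blockH window of a row-major greyscale image.
// Element (x, y) of the result is the sum of the window whose top-left
// corner is at (x, y); the result is (width - blockW + 1) wide.
std::vector<int> windowedSums(const std::vector<int>& image,
                              std::size_t width, std::size_t height,
                              std::size_t blockW, std::size_t blockH) {
    assert(image.size() == width * height);
    assert(blockW >= 1 && blockH >= 1 && blockW <= width && blockH <= height);
    const std::size_t outW = width - blockW + 1;
    const std::size_t outH = height - blockH + 1;
    std::vector<int> out(outW * outH, 0);
    for (std::size_t y = 0; y < outH; ++y)
        for (std::size_t x = 0; x < outW; ++x) {
            int sum = 0;
            for (std::size_t dy = 0; dy < blockH; ++dy)
                for (std::size_t dx = 0; dx < blockW; ++dx)
                    sum += image[(y + dy) * width + (x + dx)];
            out[y * outW + x] = sum;
        }
    return out;
}
```

Calling it once per kernel size from the convolution list (1x1, 2x2, 2x4, 4x2 in the log above) gives reference values for the validation step.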

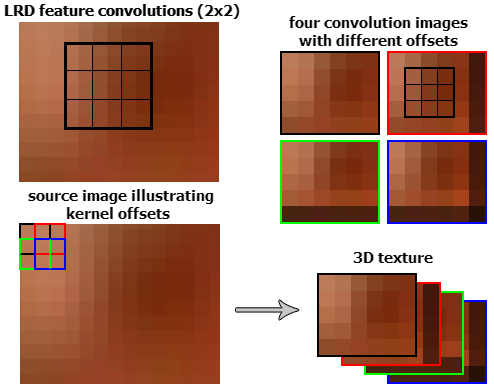

Evaluating an LRD feature requires taking a grid of samples (typically 3x3 or 4x4), and there are a lot of classifiers (160 in the testing set), so the whole thing is very texture-sampling intensive. On a GPU, the cost of texture sampling depends on the number of samples taken and their spatial coherency rather than on the amount of data transferred. Therefore a simple optimization was introduced: greyscale pixels of the convolution textures are packed into RGBA quadruples. The shader is then able to fetch up to four convolution values (i.e. one line of the grid) in just one or two texture samples (that is three or six samples, four and a half on average, instead of nine for the whole 3x3 grid, or instead of sixteen for a 4x4 grid). This, however, requires different kernel shifts to be packed into different textures, as shown in the following image:

The top-left image shows an LRD feature. To evaluate it, nine convolution values are needed (each convolution being the sum of four pixels in one field of the grid). If a plain convolved image were used, these convolution values would be separated from each other by one pixel and therefore not suitable for efficient packing into horizontal quadruples, because half of the pixels in each quadruple would be useless for the calculation of this particular feature.

To fight this problem, several smaller interleaved convolutions are calculated instead, each with a different image shift. The kernels for the first pixel of each of our four convolutions are shown in the bottom-left image.

The top-right image shows these convolutions, with the feature from the top-left image marked. It can be seen that the pixels necessary to evaluate the feature are now adjacent.

The bottom-right image shows how the convolutions are stored in a 3D texture on the GPU. Note that the images are monochromatic; colour images are used here for purely aesthetic reasons.
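The addressing implied by this layout can be sketched as below. The structure and function names are hypothetical, and the slice numbering `shiftY * blockW + shiftX` is an assumption (it happens to agree with the slice numbers in the demo log above, but the demo sources may compute it differently). The second function counts how many RGBA fetches one row of the feature grid needs.

```cpp
#include <cassert>
#include <cstddef>

// Where one convolution value ends up after interleaving and RGBA packing.
struct PackedAddress {
    std::size_t slice;    // which shifted convolution (layer of the 3D texture)
    std::size_t texelX;   // RGBA texel column inside that slice
    std::size_t texelY;   // texel row inside that slice
    std::size_t channel;  // 0..3 = R, G, B, A
};

// Maps convolution position (x, y) for a blockW x blockH kernel.
PackedAddress locate(std::size_t x, std::size_t y,
                     std::size_t blockW, std::size_t blockH) {
    assert(blockW >= 1 && blockH >= 1);
    const std::size_t shiftX = x % blockW, shiftY = y % blockH;  // selects slice
    const std::size_t cellX = x / blockW, cellY = y / blockH;    // position in slice
    return {shiftY * blockW + shiftX, cellX / 4, cellY, cellX % 4};
}

// RGBA fetches needed to read `count` horizontally adjacent grid cells
// of a feature whose first cell sits at convolution column x.
std::size_t fetchesForRow(std::size_t x, std::size_t blockW, std::size_t count) {
    assert(blockW >= 1 && count >= 1);
    const std::size_t firstTexel = (x / blockW) / 4;
    const std::size_t lastTexel = (x / blockW + count - 1) / 4;
    return lastTexel - firstTexel + 1;
}
```

For a 3x3 grid a row costs one fetch when it starts in the R or G channel and two otherwise, which gives the three or six samples per feature mentioned earlier.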

Calculating convolutions seemed to be a trivial task, but unfortunately there were sub-pixel artifacts which seriously ravaged the precision of the results. It turned out that the texture coordinates passed to OpenGL are not equal to the coordinates at the corner pixels of the resulting raster, as one might expect. This was addressed by writing a simple shader that outputs the texture coordinates it receives to a floating-point texture; these are read back to the CPU and the original coordinates are adjusted so that the shader gets the right coordinates (this is the rasterizer calibration).
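The adjustment step might look like the sketch below for one axis. It assumes the rasterizer interpolates texture coordinates linearly, so a single probe drawn with coordinates 0 and 1 is enough to solve for the corrected values; the `Range` type and `calibrate` function are hypothetical and the demo may calibrate differently.

```cpp
#include <cassert>

// A pair of texture coordinates along one axis.
struct Range {
    double begin, end;
};

// probe:  coordinates the shader reported at the first and last pixel when
//         the quad was drawn with texture coordinates 0 and 1 (read back
//         from the floating-point texture).
// wanted: coordinates the shader should receive at those two pixels.
// Returns the coordinates to pass to OpenGL instead, assuming linear
// interpolation between the quad edges.
Range calibrate(Range probe, Range wanted) {
    const double span = probe.end - probe.begin;
    assert(span != 0.0);
    const double scale = (wanted.end - wanted.begin) / span;
    const double begin = wanted.begin - scale * probe.begin;
    return {begin, begin + scale};
}
```

For example, if the probe comes back as 0.25 and 0.75 and the shader should see 0 and 1 at the corner pixels, the quad has to be drawn with coordinates -0.5 and 1.5.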

The next issue came up when rendering directly to 3D texture layers, which is impossible on NVidia cards (nasty bug?) up to the GeForce 8800, which finally addresses the problem. As a result, it is necessary to copy the texture data, which sadly takes up about half of the whole convolution calculation time.

The next stage is the classifier evaluation itself. It requires reading the proper convolution values, comparing them, and selecting the result (alpha) from the table. Reading convolution values is a bit tricky, because first the proper slice of the convolution 3D texture must be chosen and then the sampled pixels must be unpacked. The first step does not require branching, but the second does. Fortunately, it is easy to move the branching from the shader to the rasterizer using polygon stipple and multipass rendering (four passes, as there are four possible unpacking branches).
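One way to build the four stipple masks is sketched below. It assumes that the unpacking branch depends only on the output pixel column, in runs of `runLength` pixels repeating every four runs; that selection rule, the type alias and the function name are assumptions for illustration. The byte layout follows the default OpenGL unpacking rules for `glPolygonStipple` (32 rows of 32 bits, most significant bit first).

```cpp
#include <array>
#include <cassert>
#include <cstddef>

using StipplePattern = std::array<unsigned char, 128>;  // 32 rows x 32 bits

// Mask that lets through only the columns handled by unpacking branch `pass`.
// Column x belongs to branch (x / runLength) % 4.
StipplePattern makeBranchStipple(unsigned pass, unsigned runLength = 1) {
    assert(pass < 4);
    assert(runLength >= 1 && 32 % (4 * runLength) == 0);  // must tile 32 columns
    StipplePattern pattern{};
    for (unsigned row = 0; row < 32; ++row)
        for (unsigned x = 0; x < 32; ++x)
            if ((x / runLength) % 4 == pass)
                pattern[row * 4 + x / 8] |=
                    static_cast<unsigned char>(0x80u >> (x % 8));
    return pattern;
}
```

Each pass would then enable `GL_POLYGON_STIPPLE`, call `glPolygonStipple(pattern.data())` with the mask for that pass, bind the shader variant for the matching unpacking branch, and draw the same quad; the four masks are disjoint and together cover every pixel.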

Classifiers should be packed into a minimal number of long shaders so that the whole process does not take more than, say, ten rendering passes. The current demo packs its 160 classifiers into 22 shaders, each of which takes four rendering passes. Theoretically, it is possible to pack 24 classifiers into a single pass (the constant / temporary register limit), but each classifier needs different texcoords and there are only 8 texcoords. It would be possible to move texcoord interpolation to the pixel shader, but that would add unnecessary overhead; 22 x 4 rendering passes isn't really that much. Moreover, having fewer classifiers in a single pass results in smaller alpha textures, which sit nicely in the cache.

On the GeForce 6600 there are no precision issues at 256x256, but at 512x512 there are some errors in convolution slice selection which were not suppressed even by rasterizer calibration. There are not many of them, though, and the result is still pretty useful.

downloads

| version | release date | file | release notes |
|---|---|---|---|
| v0 | 2007-11-06 | gpu_ab_v0.zip | only works on single frame, mainly for validation purposes |
| v0.5 | 2008-01-06 | gpu_ab_v05.zip | only works on single frame, mainly for validation purposes |
| v1 | 2009-05-06 | abongpu_fixed_va_alpha.zip | ABOn plugin |